Adaptive compression of animated meshes by exploiting orthogonal iterations

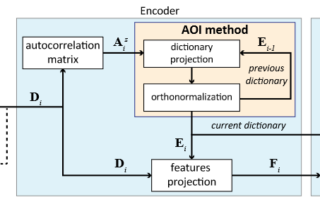

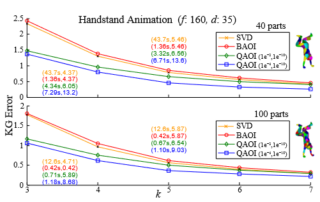

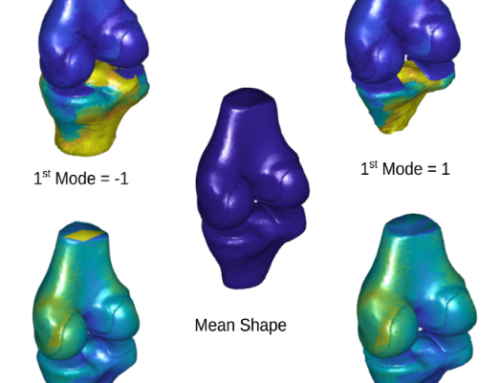

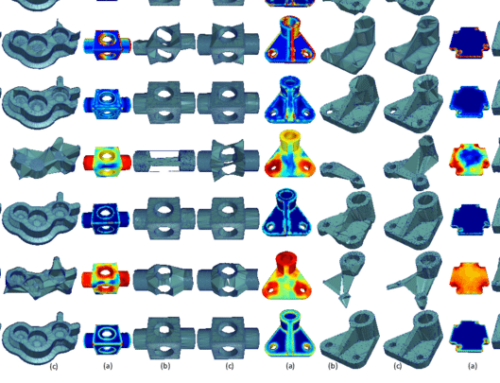

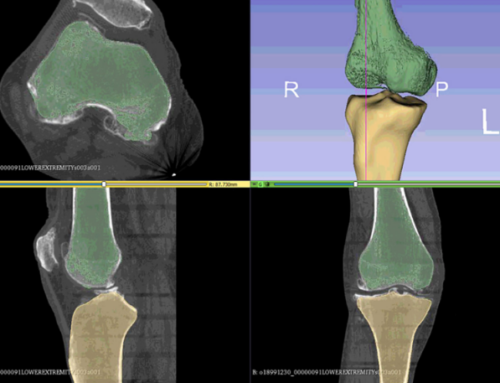

We introduce a novel approach that supports fast and efficient lossy compression of arbitrary animation sequences. It is ideally suited to real-time scenarios, such as streaming and content-creation applications, where the input is not known a priori and is generated dynamically. The presented method exploits temporal coherence by changing the principal component analysis (PCA) procedure from a batch basis to an adaptive basis, aiming to simultaneously support three important objectives: fast compression times, reduced memory requirements and high-quality reproduction results. A dynamic compression pipeline is presented that efficiently approximates the k largest PCA bases from those of the previous iteration (frame block), at a significantly lower complexity than directly computing the singular value decomposition. To avoid the errors that arise when a fixed number of basis vectors is used for all frame blocks, a flexible solution that automatically identifies the optimal subspace size for each block is also offered. Finally, an extensive experimental study shows that the proposed methods outperform several direct PCA-based schemes in terms of performance, while achieving plausible reconstruction output despite the constraints posed by arbitrarily complex animated scenarios.
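The two ideas in the abstract, warm-starting a subspace (orthogonal) iteration from the previous frame block's basis and choosing the subspace size per block, can be illustrated with the minimal NumPy sketch below. It is not the authors' implementation. It assumes frames are stored as an `(F, V, 3)` array, that each block is mean-centred, and that the rank is chosen as the smallest `k` whose captured energy reaches a fraction `tau`; that criterion, the `pad` random directions that let the rank grow, `n_iter`, and all function names are hypothetical choices made for illustration. Each iteration costs roughly O(d·f·k) for a `d x f` block, instead of a full SVD of the block.

```python
import numpy as np


def orthogonal_iteration(B, Q, n_iter=2):
    """Refine an orthonormal basis Q (d x k) toward the k leading left
    singular vectors of B (d x f) by subspace (power) iteration."""
    for _ in range(n_iter):
        # Apply B B^T to Q without ever forming the d x d matrix.
        Q, _ = np.linalg.qr(B @ (B.T @ Q))
    return Q


def compress_block(B, Q_prev, tau=0.999, pad=4, n_iter=2, rng=None):
    """Compress one mean-centred frame block B (d x f).

    Warm-starts from the previous block's basis Q_prev, adds `pad` random
    directions so the subspace can grow, then keeps the smallest k whose
    captured energy reaches the fraction `tau` (assumed criterion)."""
    rng = np.random.default_rng(0) if rng is None else rng
    d, f = B.shape
    k_try = min(Q_prev.shape[1] + pad, d, f)
    Q0 = Q_prev[:, :k_try]
    extra = rng.standard_normal((d, k_try - Q0.shape[1]))
    Q, _ = np.linalg.qr(np.hstack([Q0, extra]))
    Q = orthogonal_iteration(B, Q, n_iter)
    # Rayleigh-Ritz step: a small k_try x f SVD orders the basis by energy.
    U_small, s, _ = np.linalg.svd(Q.T @ B, full_matrices=False)
    Q = Q @ U_small
    total = float(np.sum(B * B))
    if total == 0.0:
        k = 0  # static block: the mean alone reproduces it
    else:
        energy = np.cumsum(s ** 2) / total
        k = min(int(np.searchsorted(energy, tau)) + 1, k_try)
    basis = Q[:, :k]
    return basis, basis.T @ B


def compress_sequence(frames, block=10, **kw):
    """frames: (F, V, 3) vertex positions. Returns a list of
    (mean, basis, coeffs) tuples, one per frame block."""
    F = frames.shape[0]
    X = frames.reshape(F, -1).T  # one column of 3V coordinates per frame
    Q_prev = np.zeros((X.shape[0], 0))
    out = []
    for start in range(0, F, block):
        Xb = X[:, start:start + block]
        mean = Xb.mean(axis=1, keepdims=True)
        basis, coeffs = compress_block(Xb - mean, Q_prev, **kw)
        out.append((mean, basis, coeffs))
        Q_prev = basis  # warm start for the next block
    return out


def decompress_sequence(blocks, V):
    """Rebuild the (F, V, 3) animation from the per-block tuples."""
    cols = [mean + basis @ coeffs for mean, basis, coeffs in blocks]
    return np.hstack(cols).T.reshape(-1, V, 3)
```

Only the per-block mean, the `k` basis vectors and the `k x f` coefficients need to be stored, so the rank chosen for each block directly trades bitrate against reconstruction error through `tau`.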

Aris S. Lalos, A. A. Vasilakis, A. Dimas, K. Moustakas, “Adaptive Compression of Animated Meshes by Exploiting Orthogonal Iterations”, The Visual Computer (Springer), 33(6-8): 811-821 (2017)