3D content-based search using sketches

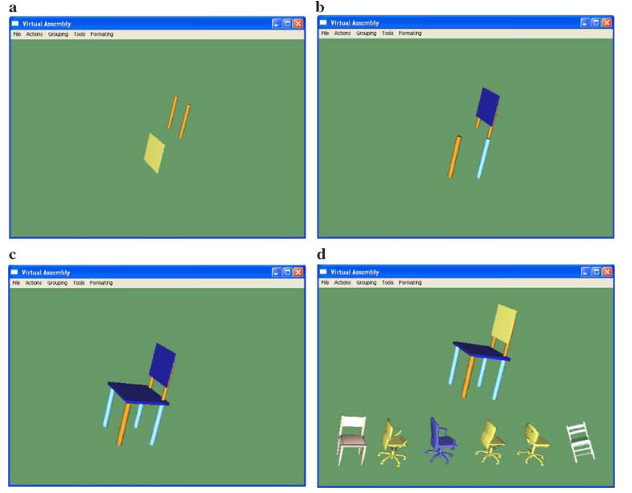

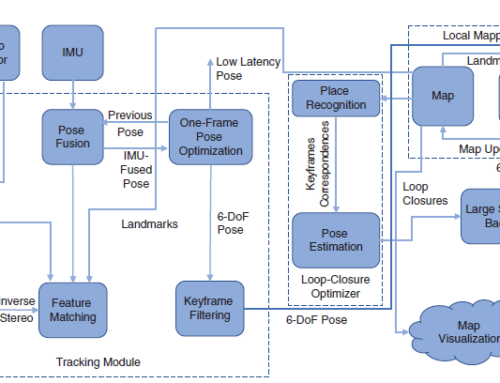

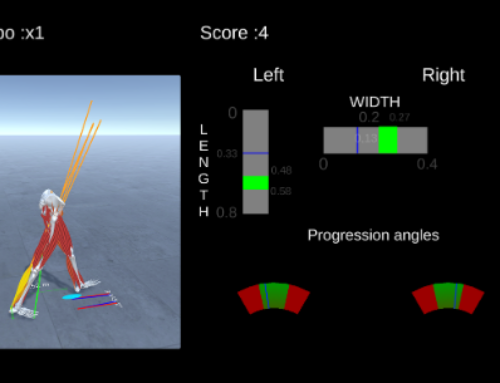

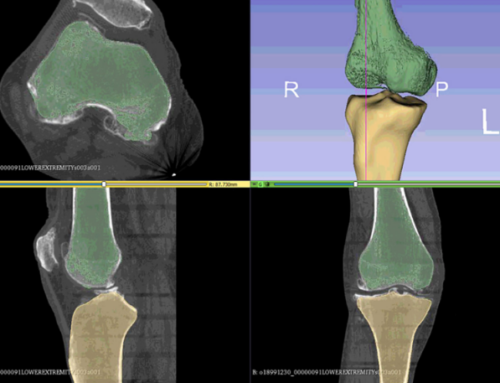

This paper presents a novel interactive framework for 3D content-based search and retrieval in which the query model is an object dynamically sketched by the user. Two approaches are presented for generating the query model. The first uses 2D sketching and a symbolic representation of the resulting gestures. The second uses non-linear least squares minimization to fit superquadrics to the 3D point cloud generated by 3D tracking of the user’s hands. Within the proposed framework, three interfaces were integrated into the sketch-based 3D search system: (a) an unobtrusive interface that uses pointing gesture recognition to let the user manipulate objects in 3D, (b) a haptic–VR interface composed of 3D data gloves and a force feedback device, and (c) a simple air–mouse. These interfaces were tested and compared according to usability and efficiency criteria.
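The abstract does not spell out the cost function used for the superquadric fit. As a rough illustration only, the sketch below assumes the widely used inside-outside formulation in the style of Solina and Bajcsy, with the superquadric in its canonical (axis-aligned, origin-centred) frame. The function names `inside_outside`, `residuals`, and `cost` are hypothetical and not taken from the paper; pose parameters and the optimizer itself are omitted.

```python
import math


def inside_outside(point, a1, a2, a3, e1, e2):
    """Superquadric inside-outside function F in the canonical frame.

    F < 1 inside the surface, F == 1 on it, F > 1 outside.
    a1, a2, a3 are the axis lengths; e1, e2 are the shape exponents.
    """
    # abs() keeps the fractional powers real for negative coordinates.
    x, y, z = (abs(c) for c in point)
    xy = (x / a1) ** (2.0 / e2) + (y / a2) ** (2.0 / e2)
    return xy ** (e2 / e1) + (z / a3) ** (2.0 / e1)


def residuals(points, a1, a2, a3, e1, e2):
    """Per-point residuals (assumed form): sqrt(a1*a2*a3) * (F**e1 - 1)."""
    scale = math.sqrt(a1 * a2 * a3)  # volume factor that favours smaller fits
    return [
        scale * (inside_outside(p, a1, a2, a3, e1, e2) ** e1 - 1.0)
        for p in points
    ]


def cost(points, a1, a2, a3, e1, e2):
    """Sum of squared residuals: the quantity a least squares fit would minimize."""
    return sum(r * r for r in residuals(points, a1, a2, a3, e1, e2))


# Three points sampled from a unit sphere (a superquadric with e1 = e2 = 1).
sphere_points = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
good_fit = cost(sphere_points, 1.0, 1.0, 1.0, 1.0, 1.0)
bad_fit = cost(sphere_points, 2.0, 2.0, 2.0, 1.0, 1.0)
```

Under these assumptions, a general-purpose non-linear least squares solver (for example a Levenberg-Marquardt routine) would search over the five shape parameters, plus position and orientation in a full system, to drive `cost` toward zero for the tracked hand points.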

K. Moustakas, G. Nikolakis, D. Tzovaras, S. Carbini, O. Bernier, J.E. Viallet, “3D content-based search using sketches”, Springer International Journal on Personal and Ubiquitous Computing, vol. 13, no. 1, pp. 59-67, January 2009.