Haptic Access to Conventional 2D Maps for the Visually Impaired

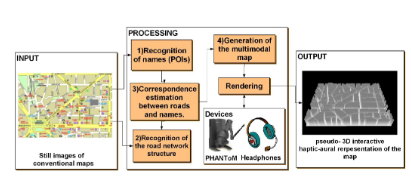

This work describes a framework for analyzing map images and presenting their semantic information to blind users through alternative modalities (i.e., haptics and audio). The resulting haptic-audio representation of the map can be used by blind users for navigation and path planning. The framework uses novel algorithms that segment the map images with morphological filters and provide indexed information on both the structure of the street network and the positions of the street names in the map. Next, off-the-shelf OCR and TTS algorithms convert the visual information of the street names into audio messages. Finally, a grooved-line-map representation of the street network is generated, which blind users can explore using a haptic device. While they navigate, audio messages are played that provide information about the user's current position (e.g., street name, crossroad notification and so on). Experimental results indicate that the proposed system is very promising for blind users, and it has been reported to be a very fast means of generating maps for the blind compared to traditional methods such as Braille images.

K. Kostopoulos, K. Moustakas, D. Tzovaras, G. Nikolakis, C. Thillou and B. Gosselin, “Haptic Access to Conventional 2D Maps for the Visually Impaired”, International Journal on Multimodal User Interfaces, vol. 1, no. 2, pp. 13-19, July 2007.