3D point clouds are a versatile and powerful format for describing 3D data. They find applications in various fields, such as robotics, autonomous vehicles, and augmented reality. Generating point clouds from partial views of objects is a crucial task for these applications, as it allows complete 3D representations to be created from limited sensor data. Point cloud generation is also useful for enriching datasets with high-quality synthetic data, which can be difficult to obtain otherwise.

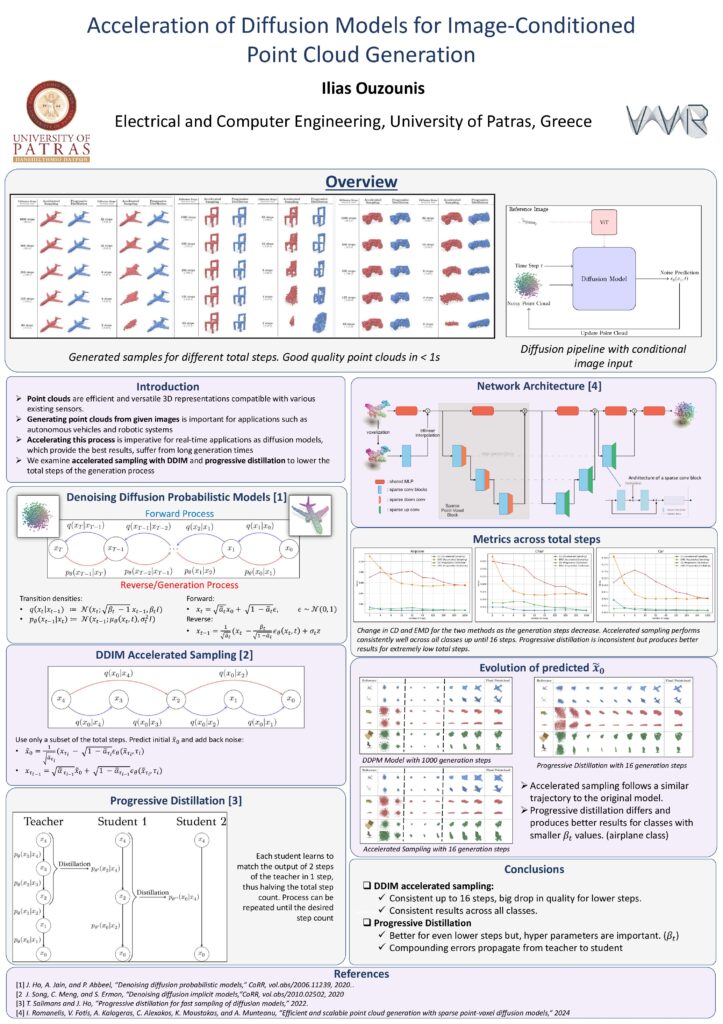

Various methods exist for generating point clouds, and diffusion models are the state-of-the-art approach in terms of quality and fidelity. However, they generate samples through many iterative denoising steps, and the resulting slow generation makes them unsuitable for real-time applications. Acceleration strategies have been developed for diffusion models in the image domain, but they have not been fully explored in the 3D point cloud domain.

In this thesis, we present a sparse voxel diffusion model architecture that generates point clouds conditioned on an input image, and we explore how known acceleration strategies can improve its generation speed. We compare the generation speed and quality of two approaches across various step counts: accelerated sampling via DDIM scheduling, and progressive distillation of the original trained model. Our results show good generation quality at low step counts, achieving a speedup of 30× compared to the original model.
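DDIM scheduling speeds up generation by sampling only a strided subset of the training timesteps, so the number of denoising network calls drops from the full training step count to the chosen step count. (Progressive distillation takes a different route: a student network is trained to match two teacher steps with a single step, halving the step count each round.) The following is a minimal sketch of deterministic DDIM sampling (eta = 0) in plain Python, not the thesis implementation. The linear beta schedule, the 1000 training steps, the noise-prediction parameterization, and all function names are illustrative assumptions; `oracle_eps` is a hypothetical toy denoiser standing in for the sparse voxel network.

```python
import math
from typing import Callable, List, Sequence


def linear_alpha_bars(num_train_steps: int = 1000,
                      beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for an assumed linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(num_train_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_train_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def ddim_timesteps(num_train_steps: int, num_sample_steps: int) -> List[int]:
    """Evenly spaced subset of the training timesteps, ordered noisiest first."""
    steps = [(i * num_train_steps) // num_sample_steps for i in range(num_sample_steps)]
    return steps[::-1]


EpsModel = Callable[[List[float], int], List[float]]


def ddim_sample(x_T: Sequence[float], eps_model: EpsModel,
                alpha_bars: Sequence[float], timesteps: Sequence[int]) -> List[float]:
    """Deterministic DDIM sampling (eta = 0): one network call per timestep."""
    x = list(x_T)
    for i, t in enumerate(timesteps):
        ab_t = alpha_bars[t]
        # alpha_bar of the next, less noisy timestep; 1.0 means fully denoised.
        ab_next = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = eps_model(x, t)
        # Estimate the clean sample from the predicted noise ...
        x0_pred = [(xi - math.sqrt(1.0 - ab_t) * ei) / math.sqrt(ab_t)
                   for xi, ei in zip(x, eps)]
        # ... then jump directly to the next timestep in the strided schedule.
        x = [math.sqrt(ab_next) * x0 + math.sqrt(1.0 - ab_next) * ei
             for x0, ei in zip(x0_pred, eps)]
    return x


# Toy usage: a hypothetical "oracle" denoiser that knows the clean target,
# so its noise prediction is exact and DDIM should recover the target.
alpha_bars = linear_alpha_bars(1000)
target = [0.5, -1.0, 2.0]
calls = [0]  # counts network evaluations


def oracle_eps(x: List[float], t: int) -> List[float]:
    calls[0] += 1
    ab = alpha_bars[t]
    return [(xi - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab)
            for xi, x0 in zip(x, target)]


schedule = ddim_timesteps(1000, 50)  # 50 network calls instead of 1000
sample = ddim_sample([0.3, 1.2, -0.7], oracle_eps, alpha_bars, schedule)
```

In this sketch the cost of sampling is proportional to `len(schedule)`, which is why reducing the step count shortens generation; how much quality survives at low step counts depends on the trained model, which is what the comparison in this thesis measures.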
